Why it matters that Pydantic v2 is written in Rust Link to heading

If you’ve worked with any form of data wrangling in Python, you’ve likely heard of Pydantic. If not, welcome! In this post, I’ll show an example of why Pydantic is so integral to the workflows of data scientists and engineers, and how just a few small changes to your existing code written in v1 can be changed as per v2, to obtain a 5x speedup, largely due to Pydantic’s internals being rewritten in Rust.

What is Pydantic and why would you use it? Link to heading

Yes, compiled language enthusiasts, we hear you. Python, unlike compiled languages (C, C++, Rust), is an interpreted, dynamically typed language where variables can change in type during run time. This means that when you write Python code, especially for large code bases involving ETL & data transformations, it becomes necessary to test for a lot of edge cases. A whole class of errors (TypeError) gets introduced into your Python runtime, where data obtained downstream may not align with expectations based on logic you or other developers put in, upstream.

Python 3.5 introduced type hints, which allows developers to declare what type a variable should be, prior to runtime. However, type hints are only marginally effective because they don’t enforce data types at runtime – they’re only a guide, and not a guarantee that the data you pass around remains a certain type. What’s the solution to this? Enter Pydantic 😎.

As per their docs, Pydantic is a data parsing, transformation and validation library that leverages the underlying information in Python type hints and adds additional checks and constraints via a schema, so that any untrusted data you obtain is transformed and validated to conform to the known schema during runtime. If the incoming data isn’t of the expected type or quality, Pydantic very loudly and explicitly throws an informative error, helping developers more easily trace down bugs or missing data prior to major deployments in production. Using Pydantic reduces the number of tests required, eliminating entire classes of bugs, streamlining the development process and ensuring that complex Python workflows run more reliably in production.

As stated in their docs1, Pydantic is a highly used library and is downloaded >70 million times a month – it is used by 20 of the 25 largest companies on the NASDAQ, as well as all the biggest tech companies in the world. Clearly, ensuring type stability and cleaner data upstream, offers a TON of value and can save a lot of wasted compute time in production! When you think of the sheer volume of data being handled at the largest companies on earth (most of them involving Python, due to its heavy use in data science and ML), it makes sense why Pydantic has become so important.

Pydantic v2: A new core 🦀 Link to heading

Normally, the release of a new major version of a library is just water under the bridge, and life goes on as normal. However, it’s because of where exactly Pydantic sits in the value chain – at the foundation of a host of data wrangling and ETL workflows – and the fact that its core functionality is now written in a systems language like Rust, that makes v2 so important. If you think about it, modern software engineering and machine learning workflows rely on large batches or streams of data coming in, and it’s a well-known fact that data quality can impact multiple outcomes of a software product2, all the way from customer engagement to insight generation and, ultimately, revenue output.

Why Rust? Link to heading

I’ve already written about this in my other blog post on how Rust has been transforming the PyData ecosystem. Rust, being a close-to-bare-metal systems language, allows developers to build highly performant and memory-safe tooling for Python users with a lot more ease than they could in other languages. This is done via PyO3, a tool that allows developer to create Rust bindings for the Python interpreter, fully leveraging the underlying power and expressivity of Rust. Since 2022, PyO3 has been having more and more of an impact on the PyData ecosystem, and the lead developer of Pydantic, Samuel Colvin, has spoken3 about the importance of the interplay between Python 🐍 and Rust 🦀, and the kind of impact this can have on the PyData ecosystem in general.

How does Pydantic v2 do its magic? Link to heading

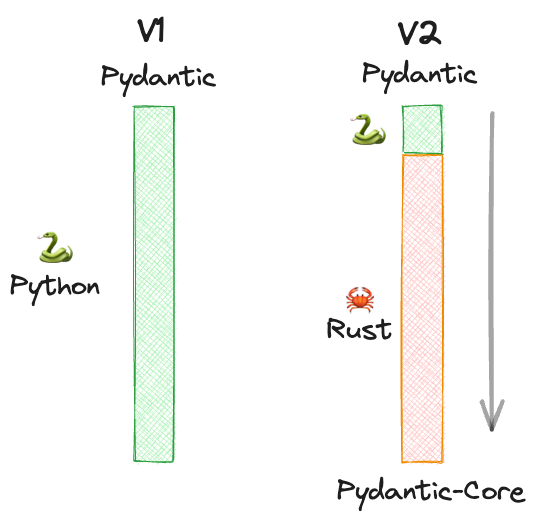

As of v2, Pydantic is now divided into two packages:

pydantic-core: Contains Rust bindings for the core validation and serialization logic, and doesn’t have a user-facing interfacepydantic: A pure Python, higher level, user-facing package

When you write your validation logic in Python via Pydantic v2, you’re simply defining “instructions” that are pushed down to the pydantic-core Rust layer. Samuel Colvin, creator of Pydantic, explains4 how a large part of the performance gains are achieved:

- Compiled Rust bindings means all the validations are happening outside of Python

- Recursive function calls are made in Rust, which have very little additional overhead

- Using a tree of small validators that call each other, making code easier to read and extend without harming performance

Case study on Pydantic v1 vs v2 Link to heading

Okay, enough of background, let’s see some code!

The data Link to heading

The example dataset we’ll be working with is the wine reviews dataset from Kaggle. It consists of 130k wine reviews from the Wine Enthusiast magazine, including the variety, location, winery, price, description, and some other metadata for each wine. Refer to the Kaggle source for more detailed information on the data and how it was scraped. The original data was downloaded as a single JSON file. For the purposes of this blog post, the data was then converted to newline-delimited JSON (.jsonl) format where each line of the file contains a valid JSON object.

An example JSON line is shown below.

{

"id": 40825,

"points": "90",

"title": "Castello San Donato in Perano 2009 Riserva (Chianti Classico)",

"description": "Made from a blend of 85% Sangiovese and 15% Merlot, this ripe wine delivers soft plum, black currants, clove and cracked pepper sensations accented with coffee and espresso notes. A backbone of firm tannins give structure. Drink now through 2019.",

"taster_name": "Kerin O'Keefe",

"taster_twitter_handle": "@kerinokeefe",

"price": "30.0",

"designation": "Riserva",

"variety": "Red Blend",

"region_1": "null",

"region_2": null,

"province": "Tuscany",

"country": "Italy",

"winery": "Castello San Donato in Perano"

}

The benchmark Link to heading

This benchmark consists of the following data validation tasks:

- Ensure the

idfield always exists (this will be the primary key), and is an integer - Ensure the

pointsfield is an integer - Ensure the

pricefield is a float - Ensure the

countryfield always has a non-null value – if it’s set asnullor thecountrykey doesn’t exist in the raw data, it must be set toUnknown. This is because the use case we defined will involve querying oncountrydownstream - Remove fields like

designation,province,region_1andregion_2if they have the valuenullin the raw data – these fields will not be queried on and we do not want to unnecessarily store null values downstream

Note on timing Link to heading

When running benchmarks, it makes sense to use a convenience library like codetiming. This makes it easier to wrap the portions of the code we want to time without having to add/remove time() commands over and over.

Schema Link to heading

The schema file is at the root of the validation logic in Pydantic. The general practice is to use validators, which are a special kind of class method in Pydantic, to transform or validate data and ensure it’s of the right type and format for downstream tasks. Using validators is far cleaner than writing custom functions that manipulate data (they would be riddled with ugly if-else statements or isinstance clauses). The schema below implements the validation logic we defined above. To see the differences in code between Pydantic v2 and v1, switch between the tabs shown below.

# Python 3.10+ syntax

from pydantic import BaseModel, model_validator

class Wine(BaseModel):

id: int

points: int

title: str

description: str | None

price: float | None

variety: str | None

winery: str | None

designation: str | None

country: str | None

province: str | None

region_1: str | None

region_2: str | None

taster_name: str | None

taster_twitter_handle: str | None

@model_validator(mode="before")

def _remove_unknowns(cls, values):

"Set other fields that have the value 'null' as None so that we can throw it away"

fields = ["designation", "province", "region_1", "region_2"]

for field in fields:

if not values.get(field) or values.get(field) == "null":

values[field] = None

return values

@model_validator(mode="before")

def _fill_country_unknowns(cls, values):

"Fill in missing country values with 'Unknown', as we always want this field to be queryable"

country = values.get("country")

if not country or country == "null":

values["country"] = "Unknown"

return values

@model_validator(mode="before")

def _get_vineyard(cls, values):

"Rename designation to vineyard"

vineyard = values.pop("designation", None)

if vineyard:

values["vineyard"] = vineyard.strip()

return values

# Python 3.10+ syntax

from pydantic import BaseModel, root_validator

class Wine(BaseModel):

id: int

points: int

title: str

description: str | None

price: float | None

variety: str | None

winery: str | None

designation: str | None

country: str | None

province: str | None

region_1: str | None

region_2: str | None

taster_name: str | None

taster_twitter_handle: str | None

@root_validator

def _remove_unknowns(cls, values):

"Set other fields that have the value 'null' as None so that we can throw it away"

fields = ["designation", "province", "region_1", "region_2"]

for field in fields:

if not values.get(field) or values.get(field) == "null":

values[field] = None

return values

@root_validator

def _fill_country_unknowns(cls, values):

"Fill in missing country values with 'Unknown', as we always want this field to be queryable"

country = values.get("country")

if not country or country == "null":

values["country"] = "Unknown"

return values

@root_validator

def _get_vineyard(cls, values):

"Rename designation to vineyard"

vineyard = values.pop("designation", None)

if vineyard:

values["vineyard"] = vineyard.strip()

return values

Differences in code between v2 and v1 Link to heading

There are only a couple of areas where we need to update the code based on the differences between v1 and v2 of Pydantic.

- In Pydantic v2,

root_validatoris deprecated, and we instead usemodel_validator- In a similar way,

validatoris deprecated and we instead usefield_validatorwhere we may need to validate specific fields (not used in this example)

- In a similar way,

- The keyword arguments to the

model_validatordiffer from v1’sroot_validator(and in my view, have cleaner, more intuitive names)mode="before"here means that we are performing data transformations on the fields that already exist in the raw data, prior to validation

That’s it! With just these minor changes (everything else is kept the same) in the schema definition, we are now ready to test their performance!

Run benchmark Link to heading

Set up two separate Python virtual environments, one with Pydantic v2 installed, and another one with v1 installed. Each portion of the code base is explained in the following sections.

Read in data as a list of dicts Link to heading

The raw data is stored in line-delimited JSON (gzipped) format, so it is first read in as a list of dicts. The lightweight serialization library srsly is used for this task: pip install srsly

from pathlib import Path

from typing import Any

import srsly

def get_json_data(data_dir: Path, filename: str) -> list[JsonBlob]:

"""Get all line-delimited files from a directory with a given prefix"""

file_path = data_dir / filename

data = srsly.read_gzip_jsonl(file_path)

return data

if __name__ == "__main__":

DATA_DIR = Path("../data")

FILENAME = "winemag-data-130k-v2.jsonl.gz"

data = list(get_json_data(DATA_DIR, FILENAME))

# data is now a list of dicts

Define validation function Link to heading

- Define a function that imports the

Wineschema defined above (separately for v1 and v2 of Pydantic). - The list of dicts that contain the raw data are unwrapped in the

Winevalidator class, which performs validation and type coercion - Note the change in syntax for dumping the validated data as a dict (in v1, the

dict()method was used, but in v2, it’s renamed tomodel_dump).- The

exclude_none=Truekeyword argument tomodel_dumpremoves fields that have the valueNone– this saves space and avoids us storingNonevalues downstream

- The

from schemas import Wine

def validate(data: list[JsonBlob]) -> list[JsonBlob]:

"""Validate a list of JSON blobs against the Wine schema"""

validated_data = [Wine(**item).model_dump(exclude_none=True) for item in data]

return validated_data

from schemas import Wine

def validate(data: list[JsonBlob]) -> list[JsonBlob]:

"""Validate a list of JSON blobs against the Wine schema"""

validated_data = [Wine(**item).dict(exclude_none=True) for item in data]

return validated_data

Define a run function that wraps a timer Link to heading

To time each validation cycle, we wrap the run function inside a Timer context manager as follows. Note that this requires that the codetiming library is installed: pip install codetiming.

from codetiming import Timer

def run():

"""Wrapper function to time the validator over many runs"""

with Timer(name="Single case", text="{name}: {seconds:.3f} sec"):

validated_data = validate(data)

print(f"Validated {len(validated_data)} records in cycle {i + 1} of {num}")

Run in a loop Link to heading

We can then call the run() method in a loop to perform validation 10 times, to see the average run time. To measure the total run time for all 10 cycles, wrap the loop itself inside another Timer block as follows:

num = 10

with Timer(name="All cases", text="{name}: {seconds:.3f} sec"):

for i in range(num):

run()

Results Link to heading

| Run | Pydantic v1 | Pydantic v2 |

|---|---|---|

| Cycle 1 | 2.952 sec | 0.584 sec |

| Cycle 2 | 2.944 sec | 0.580 sec |

| Cycle 3 | 2.952 sec | 0.587 sec |

| Cycle 4 | 2.946 sec | 0.576 sec |

| Cycle 5 | 2.925 sec | 0.578 sec |

| Cycle 6 | 2.926 sec | 0.582 sec |

| Cycle 7 | 2.927 sec | 0.575 sec |

| Cycle 8 | 2.921 sec | 0.582 sec |

| Cycle 9 | 2.950 sec | 0.590 sec |

| Cycle 10 | 2.926 sec | 0.582 sec |

| Total | 29.585 sec | 6.032 sec |

Why did v2 perform so much better? Link to heading

- Our v1 validation logic involved looping through dictionary key/value pairs and performing type checks and replacements in Python

- In v2 of Pydantic, all these operations, which are normally rather expensive in Python (due to its dynamic nature), are pushed down to the Rust level

- None of the loops in the validator expressions are actually run in Python, explaining why without any major code changes, we see a large gain in performance

In many real world scenarios, the validation logic implemented in Python (due to business needs) can get rather complex, so the fact that library developers can leverage the power of Rust and reuse code this way, is a huge deal.

Conclusions and broader implications Link to heading

The timing numbers shown in this case study were for validation only. In a full-blown ETL workflow in production, it’s likely that there are many such scripts working on data far larger than 1 million records. It’s easy to imagine that, when adding up the number of validations performed in Python at all the FAANG-scale companies (who really do use Pydantic in production), we would get a number in the trillions, purely due to the scale of data and the complexity of tasks being performed at these companies.

In 2019, Amazon’s AWS announced official sponsorship to the Rust project. AWS is now among the core contributors to the development of the Rust language, and for good reason: in 2022 AWS published a blog post highlighting how large-scale adoption of Rust at the core of compute-heavy operations can reduce energy usage in data centres worldwide5 by almost 50%! 🤯 As per data available in 20206, data centres consume about 200 terawatt hours per year globally, which amounts to around 1% of the total energy consumed on our planet. It’s natural that with the frenzy of activity in AI and cloud computing these days, energy utilization in data centres will only grow with time, so any reduction in energy consumption via more efficient bare-metal operations has massive consequences!

Projects like Pydantic that perform core lower-level operations in Rust while allowing higher-level developers to build rapidly can have a huge impact on reducing energy consumption worldwide.

There are many other projects also going down the road of building faster, more efficient Python tooling on top of Rust, but projects like Pydantic, which were among the first to do so, will surely serve as an inspiration to the community at large. 💪

Hopefully this post will serve as an inspiration for you to begin exploring (and hopefully, learning) Rust 🦀!

Reproduce the benchmark Link to heading

The code and the data to reproduce these results are available on GitHub. Depending on the CPU your machine uses, your mileage may vary. Have fun and happy Pydant-ing! 😎

Data Quality in AI: Challenges, Importance & Best Practices, AIMultiple ↩︎

How Pydantic V2 leverages Rust’s Superpowers, FOSDEM ‘23 ↩︎

The Talk Python Live Stream #376, Pydantic v2 plan ↩︎

Sustainability with Rust, AWS Open Source Blog ↩︎

Global data centre energy demand by data centre type, 2010-2022, iea.org ↩︎